Building a Question-Answering System (Different Use Cases)

An information retrieval system, especially a question-answering (QNA) system, is an ambitious and costly model to build and maintain. Model compression techniques such as distillation help make the model lightweight and fast. This article discusses the main aspects of a QNA model: the different techniques for building one, the problems faced while building it, and introductory code for a QNA model using BERT.

What Is a Question Answering System?

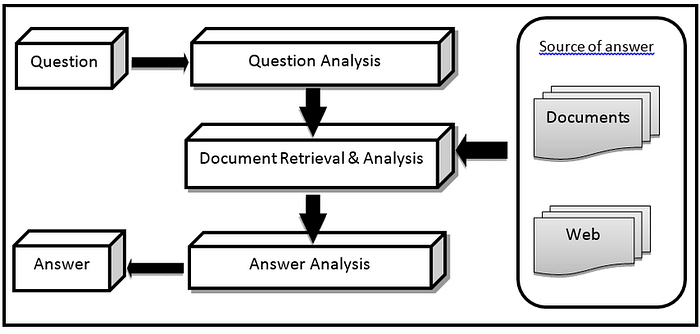

A Question Answering (QA) system is an extractive information retrieval system that returns a direct answer in response to a submitted query. It needs three things: a context that contains the answer, a model trained on the QNA task, and the question to be answered.
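To make "extractive" concrete: BERT-style QA models typically score every token as a possible answer start and as a possible answer end, and the answer is the best-scoring span between the two. The sketch below shows only that selection step on made-up scores. The function name `best_span`, the `max_len` cap, and all the numbers are illustrative assumptions, not output from a real model.

```python
def best_span(start_scores, end_scores, max_len=10):
    """Return (start, end) token indices of the highest-scoring valid span."""
    best, best_score = (0, 0), float("-inf")
    for s, s_score in enumerate(start_scores):
        # The end must not precede the start, and the span is capped at max_len tokens
        for e in range(s, min(s + max_len, len(end_scores))):
            score = s_score + end_scores[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


# Toy context tokens with hypothetical start/end scores for the question
# "Where is the Eiffel Tower?"
tokens = ["the", "eiffel", "tower", "is", "in", "paris", "france"]
start_scores = [0.1, 0.3, 0.2, 0.0, 0.4, 2.5, 0.9]
end_scores = [0.0, 0.1, 0.6, 0.0, 0.1, 1.2, 2.0]

start, end = best_span(start_scores, end_scores)
print(" ".join(tokens[start:end + 1]))
```

Searching over pairs, rather than taking the best start and best end independently, guarantees that the end never comes before the start.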

Why and Where Do We Need QNA Systems?

There are two types of information retrieval systems:

1. Similarity-based retrieval (search engines)

2. Exact, fact-based answer extraction (domains where we want to find historical facts, such as legal and medical)

This article is about the second type. A question answering implementation may construct its answers by querying a structured database of knowledge or information, usually a knowledge base. More commonly, question answering systems pull answers from an unstructured collection of natural language documents.

Things you should know before building a QNA system:

- How to measure a language model's performance

- How to prepare QNA labelled data

- What transfer learning in NLP is

- What the distillation technique is

- TensorFlow/PyTorch

How to Measure a Language Model's Performance

There are two popular ways to measure the strength of a language model:

a. Perplexity

b. Masked word prediction

a. Perplexity:

Perplexity is a metric used to judge how good a language model is. The lower the perplexity, the better the model predicts the test text.

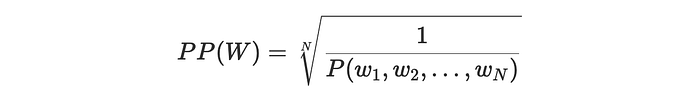

- We can define perplexity as the inverse probability of the test set, normalized by the number of words:

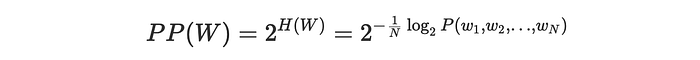

- We can alternatively define perplexity using the cross-entropy: the cross-entropy gives the average number of bits needed to encode one word, and perplexity is 2 raised to that number of bits, i.e. the number of equally likely words that those bits can encode.

- We can interpret perplexity as the weighted branching factor. If we have a perplexity of 50, it means that whenever the model is trying to guess the next word, it is as confused as if it had to pick between 50 equally likely words.
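The two definitions above can be checked against each other with a few lines of Python. This is a minimal sketch: the function names and the per-word probabilities are made up for illustration, and a real evaluation would take the probabilities from the language model on a held-out test set.

```python
import math


def perplexity_from_probs(word_probs):
    """Inverse probability of the sequence, normalized by the number of words."""
    n = len(word_probs)
    log_prob = sum(math.log(p) for p in word_probs)  # log P(w_1 ... w_N)
    return math.exp(-log_prob / n)


def perplexity_from_cross_entropy(word_probs):
    """2 raised to the cross-entropy, measured in bits per word."""
    n = len(word_probs)
    cross_entropy_bits = -sum(math.log2(p) for p in word_probs) / n
    return 2 ** cross_entropy_bits


# Hypothetical probabilities a model assigned to each word of a test sentence
probs = [0.2, 0.05, 0.1, 0.4]
print(perplexity_from_probs(probs))
print(perplexity_from_cross_entropy(probs))

# A model that always spreads its probability evenly over 50 words
uniform = [1 / 50] * 8
print(perplexity_from_probs(uniform))
```

Both functions return the same value for the same probabilities, and the uniform case comes out at 50 (up to floating-point rounding), which is the branching-factor reading of perplexity.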

b. Masked word prediction:

Because we are using a masked language model (BERT) architecture, we can also check the model's performance by masking some words in a sentence and then predicting those words with the language model.

Example: income tax [MASK], where the model should predict “returns” for the [MASK] token, completing the string “income tax returns”.

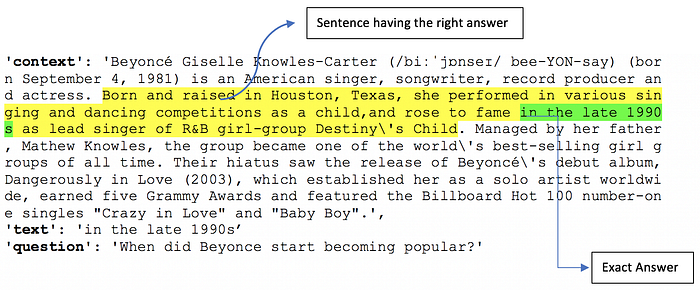
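One way to turn masked word prediction into a number is to mask a word in held-out sentences and count how often the model's top guesses contain it. The sketch below does this for the last word only. `masked_word_accuracy` and `toy_predict` are hypothetical names, and `toy_predict` is a lookup table standing in for a real model; in practice it could wrap something like the Hugging Face `fill-mask` pipeline.

```python
def masked_word_accuracy(sentences, predict_fn, k=1):
    """Mask the last word of each sentence and check whether predict_fn recovers it.

    predict_fn takes a string containing [MASK] and returns candidate words,
    best first. It stands in for a real masked language model.
    """
    hits = 0
    for sentence in sentences:
        words = sentence.split()
        target = words[-1]
        masked = " ".join(words[:-1] + ["[MASK]"])
        if target in predict_fn(masked)[:k]:
            hits += 1
    return hits / len(sentences)


def toy_predict(masked_sentence):
    """Hypothetical stand-in for a model: a lookup table of canned guesses."""
    table = {
        "income tax [MASK]": ["returns", "rate", "act"],
        "capital gains [MASK]": ["rate", "tax", "account"],
    }
    return table.get(masked_sentence, [])


held_out = ["income tax returns", "capital gains tax"]
print(masked_word_accuracy(held_out, toy_predict, k=1))
print(masked_word_accuracy(held_out, toy_predict, k=2))
```

Comparing this accuracy for a generic model and a domain-adapted model on the same domain sentences gives a quick sense of how much domain language the model has picked up.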

Preparation format of QNA labelled data:

The data will be in the form of dictionaries with the following keys:

dict_keys(['input_ids', 'token_type_ids', 'attention_mask', 'start_positions', 'end_positions'])

- “input_ids” combines the context and question token encodings.

- “attention_mask” marks which positions of input_ids are real tokens (1) and which are padding (0).

- “start_positions” is the token index at which the answer starts in the encoded context.

- “end_positions” is the token index at which the answer ends in the encoded context.

- “token_type_ids” marks which sequence each token belongs to. Special separator tokens are enough for some models to understand where one sequence ends and where another begins. However, other models, such as BERT, also use token type IDs (also called segment IDs). They are represented as a binary mask identifying the two types of sequence in the model input.
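To show how these fields line up, here is a toy encoder that builds them by hand for one context/question pair. It is only an illustration under simplifying assumptions: the vocabulary and ids are made up, words are not split into WordPiece sub-tokens, and `encode_pair` is a hypothetical helper, not a library function. The context comes first and the question second, matching the order used in the code later in this article.

```python
def encode_pair(context_tokens, question_tokens, vocab, max_len):
    """Build BERT-style fields for one context/question pair (toy version)."""
    tokens = ["[CLS]"] + context_tokens + ["[SEP]"] + question_tokens + ["[SEP]"]
    # Segment 0 covers [CLS] + context + first [SEP]; segment 1 covers the rest
    token_type_ids = [0] * (len(context_tokens) + 2) + [1] * (len(question_tokens) + 1)
    attention_mask = [1] * len(tokens)

    # Pad every field to max_len; padded positions get attention 0
    pad = max_len - len(tokens)
    return {
        "input_ids": [vocab[t] for t in tokens] + [vocab["[PAD]"]] * pad,
        "token_type_ids": token_type_ids + [0] * pad,
        "attention_mask": attention_mask + [0] * pad,
    }


context = ["the", "eiffel", "tower", "is", "in", "paris"]
question = ["where", "is", "the", "eiffel", "tower"]
words = ["[PAD]", "[CLS]", "[SEP]"] + sorted(set(context + question))
vocab = {word: idx for idx, word in enumerate(words)}  # made-up ids

example = encode_pair(context, question, vocab, max_len=16)
# The answer "paris" is context token 5; [CLS] shifts it to position 6
example["start_positions"] = 6
example["end_positions"] = 6

for key, value in example.items():
    print(key, value)
```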

What Are the Different Ways to Build a QNA System?

- Build your own QNA model from scratch.

- Take a pre-trained language model, add a question-answering layer on top of it, and train it on domain labelled data.

- Build your own language model from scratch on your domain data, and then train it on labelled data.

- Take a pre-trained language model, add some layers and train on domain data to make a domain language model, then add a QNA layer and train the new language model on labelled data.

Different Problems We Face While Building a QNA System

Getting a language understanding of domain data is very hard. The language models that are available are generic. We need a language model that represents our domain, which we can then fine-tune for QNA. The main problems are:

- The amount of domain labelled data: it is hard to get a considerable amount of it.

- Representative domain data for fine-tuning the domain language model.

- Most language models come with a maximum sequence length of 512 tokens, but in real-life scenarios context sequences can be much longer.

- Domain vocabulary that is missing from the pre-trained model.

- Incremental learning is very hard to implement in this setting.

- Longer training time, huge CPU and GPU cost, many steps involved, and accuracy that is still not great.
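For the 512-token limit in particular, a common workaround (not something this article's code does) is to split a long context into overlapping windows and run the model on each one. The sketch below shows only the windowing idea. `sliding_windows` is a hypothetical helper, and it ignores the question and special tokens, which also count against the limit. Hugging Face tokenizers expose `stride` and `return_overflowing_tokens` options for the same purpose.

```python
def sliding_windows(tokens, max_len=512, stride=128):
    """Split tokens into windows of at most max_len that overlap by stride tokens."""
    if stride >= max_len:
        raise ValueError("stride must be smaller than max_len")
    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break
        # Step forward, keeping the last `stride` tokens of the previous window
        start += max_len - stride
    return windows


# Toy "document" of 1,200 token ids
doc = list(range(1200))
chunks = sliding_windows(doc, max_len=512, stride=128)
print([(c[0], c[-1]) for c in chunks])
```

The overlap is there so that an answer cut off at the edge of one window still appears whole in the next one.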

A Bit of Implementation Code

The following are the steps for training a custom question-answering model from a pre-trained model such as “bert-base-uncased”:

* Data Preparation

Prepare the QNA data as lists of contexts, questions, and answers (the contexts are repeated, since there are multiple questions per context). The contexts and questions are plain strings. The answers are dicts containing the subsequence of the passage with the correct answer, as well as an integer indicating the character at which the answer begins. To train a model on this data we need:

(1) the tokenized context/question pairs, and

(2) integers indicating at which token positions the answer begins and ends.

* Feature Vectorizer

Tokenize (encode) each context/question pair with a fast tokenizer (BertTokenizerFast here, or DistilBertTokenizerFast for a distilled model), and convert the character start/end positions to token start/end positions using the tokenizer itself.

* Model Training

Train a BERT (or DistilBERT) model with a QA head.

* Model Saving

Save the model in binary format.

Get the SQuAD labelled data

    import os
    import requests

    # Download the SQuAD v2.0 train and dev splits
    train = requests.get('https://rajpurkar.github.io/SQuAD-explorer/dataset/train-v2.0.json')
    test = requests.get('https://rajpurkar.github.io/SQuAD-explorer/dataset/dev-v2.0.json')

    # Create the target folder before writing the files
    os.makedirs('squad', exist_ok=True)

    with open('squad/train.json', 'wb') as f:
        f.write(train.content)
    with open('squad/test.json', 'wb') as f:
        f.write(test.content)

Data Preparation

The data preparation step is covered in this notebook:

https://github.com/huggingface/notebooks/blob/master/examples/question_answering.ipynb
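As a rough idea of what that step produces, the sketch below flattens SQuAD-format JSON into the lists of contexts, questions, and answers used in the following code (`train_contexts`, `train_questions`, `train_answers`), and adds the `answer_end` key it reads. The helper names `read_squad` and `add_end_idx` and the inline sample are illustrative. Storing `answer_end` as the index of the answer's last character (inclusive) is an assumption made here so that `char_to_token` lands on the answer's final token; check it against the convention your own preparation code uses.

```python
def read_squad(squad_dict):
    """Flatten SQuAD-format JSON into parallel lists of contexts, questions, answers."""
    contexts, questions, answers = [], [], []
    for article in squad_dict["data"]:
        for paragraph in article["paragraphs"]:
            for qa in paragraph["qas"]:
                # SQuAD v2.0 has unanswerable questions (empty "answers"); they are skipped
                for answer in qa["answers"]:
                    contexts.append(paragraph["context"])
                    questions.append(qa["question"])
                    answers.append(dict(answer))
    return contexts, questions, answers


def add_end_idx(answers, contexts):
    """Add 'answer_end': index of the last character of each answer (assumed inclusive)."""
    for answer, context in zip(answers, contexts):
        text = answer["text"]
        start = answer["answer_start"]
        # Some SQuAD labels are off by a character or two, so try small left shifts
        for shift in (0, 1, 2):
            candidate = start - shift
            if candidate >= 0 and context[candidate:candidate + len(text)] == text:
                start = candidate
                break
        answer["answer_start"] = start
        answer["answer_end"] = start + len(text) - 1


# Tiny inline sample in SQuAD format (made up for illustration)
squad_dict = {
    "data": [{
        "title": "Paris",
        "paragraphs": [{
            "context": "The Eiffel Tower is in Paris.",
            "qas": [
                {"question": "Where is the Eiffel Tower?",
                 "answers": [{"text": "Paris", "answer_start": 23}]},
                {"question": "What is in Paris?",
                 "answers": [{"text": "The Eiffel Tower", "answer_start": 0}]},
            ],
        }],
    }]
}

train_contexts, train_questions, train_answers = read_squad(squad_dict)
add_end_idx(train_answers, train_contexts)
print(train_answers)
```

With the downloaded files, `squad_dict` would instead come from loading `squad/train.json` (and `squad/test.json` for the validation lists) with the `json` module.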

Feature Vectorizer

    from transformers import BertTokenizerFast

    # 'QNA_model' is assumed to be a local directory with the pre-trained (e.g. domain)
    # model and tokenizer files; use 'bert-base-uncased' for the generic checkpoint
    tokenizer = BertTokenizerFast.from_pretrained('QNA_model', return_token_type_ids=True)

    # Contexts go first and questions second, so character offsets refer to the context
    train_encodings = tokenizer(train_contexts, train_questions, add_special_tokens=True, truncation=True, padding=True)
    val_encodings = tokenizer(val_contexts, val_questions, truncation=True, padding=True)

    def add_token_positions(encodings, answers):
        counter = 0  # number of answers whose start was lost to truncation
        start_positions = []
        end_positions = []
        for i in range(len(answers)):
            if encodings.char_to_token(i, answers[i]['answer_start']) is None:
                counter += 1
            start_positions.append(encodings.char_to_token(i, answers[i]['answer_start']))
            end_positions.append(encodings.char_to_token(i, answers[i]['answer_end']))
            # If None, the answer passage has been truncated
            if start_positions[-1] is None:
                start_positions[-1] = tokenizer.model_max_length
            # answer_end may point to the space before the correct token, so add 1
            if end_positions[-1] is None:
                end_positions[-1] = encodings.char_to_token(i, answers[i]['answer_end'] + 1)
            # Still None: the end of the answer was truncated as well
            if end_positions[-1] is None:
                end_positions[-1] = tokenizer.model_max_length
        encodings.update({'start_positions': start_positions, 'end_positions': end_positions})

    add_token_positions(train_encodings, train_answers)
    add_token_positions(val_encodings, val_answers)

    import torch

    class SquadDataset(torch.utils.data.Dataset):
        """Wraps the tokenizer output so that examples can be fetched by index."""

        def __init__(self, encodings):
            self.encodings = encodings

        def __getitem__(self, idx):
            # Convert every field of example idx to a tensor
            return {key: torch.tensor(val[idx]) for key, val in self.encodings.items()}

        def __len__(self):
            return len(self.encodings.input_ids)

    train_dataset = SquadDataset(train_encodings)
    val_dataset = SquadDataset(val_encodings)

Model Training

    from transformers import BertForQuestionAnswering, Trainer, TrainingArguments

    # Load the language model with a question-answering head on top
    model = BertForQuestionAnswering.from_pretrained('QNA_model')

    training_args = TrainingArguments(
        do_eval=True,
        output_dir='./results',          # output directory
        num_train_epochs=3,              # total number of training epochs
        per_device_train_batch_size=8,   # batch size per device during training
        per_device_eval_batch_size=8,    # batch size for evaluation
        warmup_steps=500,                # number of warmup steps for learning rate scheduler
        weight_decay=0.01,               # strength of weight decay
        logging_dir='./logs',            # directory for storing logs
        logging_steps=10,
        save_steps=2500,
        eval_steps=100,
    )

    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        eval_dataset=val_dataset,
    )

    trainer.train()

Save model

    # Write the fine-tuned weights and config to the 'QNA_model' directory
    model.save_pretrained('QNA_model')

I have experimented with all of the approaches explained above.

Conclusion:

A QNA model needs a lot of resources, such as large GPUs, if you want to train on a good amount of labelled data with a good language model and still have it run fast. Considering all of the above, it is not easy to put a fast, production-ready QNA predictor in place: bigger models give better accuracy but take more time to predict. With the distillation technique, however, we can put a good and fast-performing model into production.

Suggestions for improvement are welcome.

Below are code links for all the above concepts.

References :

QNA from scratch : https://colab.research.google.com/github/huggingface/blog/blob/master/notebooks/01_how_to_train.ipynb#scrollTo=ltXgXyCbAJLY

Domain Language model+QNA Layer : https://github.com/huggingface/notebooks/blob/master/examples/question_answering.ipynb

Domain model from scratch : https://colab.research.google.com/github/huggingface/blog/blob/master/notebooks/01_how_to_train.ipynb#scrollTo=ltXgXyCbAJLY

Fine tune language model on domain data to get domain language model : https://colab.research.google.com/github/Novetta/adaptnlp/blob/master/tutorials/Finetuning%20and%20Training%20(Advanced)/Fine-tuning%20Language%20Model.ipynb#scrollTo=gXZorNL_ETWd